I’ve been listening to podcasts for over 15 years. Back then, ads were host-read and usually relevant. Now? Many shows cram in 15+ minutes of loud, intrusive ads per hour.

So I built a system to cut most ads from the podcasts I listen to. It outputs an updated feed I can use in my podcast app, Overcast, and in testing it removes about 95% of ads. Here’s how I got there: what didn’t work, what did, and the trade-offs along the way.

1. The Naïve Approach: One-Shot “Find the Ads”

I started by feeding the full transcript (generated by Whisper) to an LLM with a prompt like:

“Here’s the entire transcript; give me the ad timestamps.”

Result:

It barely worked. The model would return a few timestamps early on, then miss everything else. One-shot prompting wasn’t enough.

2. Splitting up the Problem

Next, I split transcripts into overlapping chunks and asked the model to score each segment for “ad-likelihood.”
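A minimal sketch of the chunking step, assuming the transcript has already been split into a list of words. The 20-word chunk size matches the segment size mentioned later in this post; the 5-word overlap and the function name `overlapping_chunks` are illustrative guesses, not the values or names from the real system.

```python
from typing import Iterator


def overlapping_chunks(
    words: list[str], size: int = 20, overlap: int = 5
) -> Iterator[tuple[int, list[str]]]:
    """Yield (start_index, chunk) pairs, each sharing `overlap` words with the previous chunk."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    for start in range(0, len(words), step):
        yield start, words[start:start + size]
        # Stop once a chunk has reached the end of the transcript.
        if start + size >= len(words):
            break


if __name__ == "__main__":
    transcript = "this episode is brought to you by a sponsor " * 6
    for start, chunk in overlapping_chunks(transcript.split()):
        print(start, " ".join(chunk))
```

The overlap means an ad that starts near a chunk boundary still appears mostly intact in at least one chunk.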

Result:

Better. With much less input per request, the model could find ad segments later in the podcast, but it was still flawed:

- It confused “house ads” (self-promotion) with real sponsor ads.

- It sometimes flagged normal intros/outros as ads.

3. Multi-Head Prompting: Specializing by Ad Type

The breakthrough came from asking different questions for different ad types:

- Brand ads: Look for promo codes, URLs, sponsor phrasing.

- House ads / cross-promos: Compare content to show notes. If the segment promotes another podcast or has phrases like “new season out now,” mark it.

- Known ads: Use listener reports to feed brand/podcast names (plus a hard-coded list of the Squarespaces and BetterHelps of the world) back into the system, biasing future runs.
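Here is a rough sketch of how the three "heads" could be wired together. Everything in it is an assumption for illustration: the prompt wording, the 0.5 threshold, the names `PROMPTS` and `classify_segment`, and especially `ask`, a hypothetical callable that sends a prompt to whichever LLM you use and returns an ad-likelihood between 0 and 1.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

THRESHOLD = 0.5  # assumed cut-off; tune against your own episodes

# Illustrative prompt templates, one per ad type (not the real prompts).
PROMPTS = {
    "brand": "Does this segment read like a sponsor ad (promo codes, URLs, "
             "sponsor phrasing)?\nSegment: {segment}",
    "house": "Does this segment promote another podcast or the show itself "
             "instead of the episode described here?\nShow notes: {show_notes}\n"
             "Segment: {segment}",
    "known": "Does this segment mention any of these previously reported "
             "advertisers: {brands}?\nSegment: {segment}",
}


@dataclass
class SegmentVerdict:
    text: str
    scores: dict[str, float]

    @property
    def label(self) -> Optional[str]:
        """Highest-scoring ad type, or None if no head clears the threshold."""
        kind, score = max(self.scores.items(), key=lambda kv: kv[1])
        return kind if score >= THRESHOLD else None


def classify_segment(
    text: str,
    ask: Callable[[str], float],  # hypothetical LLM wrapper
    show_notes: str = "",
    known_brands: Sequence[str] = (),
) -> SegmentVerdict:
    """Ask one specialised question per ad type: three requests per segment."""
    scores = {
        kind: ask(template.format(segment=text, show_notes=show_notes,
                                  brands=", ".join(known_brands)))
        for kind, template in PROMPTS.items()
    }
    return SegmentVerdict(text=text, scores=scores)
```

Keeping the heads separate also tells you which kind of ad was found, which is handy when a listener report says a cross-promo slipped through.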

Result:

Far fewer false positives and much better recall, especially for tricky cross-promos. The downside: a much bigger AI bill! Each ~20-word segment of the transcript now means 3 additional LLM requests.
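To get a feel for the bill, here is a back-of-the-envelope estimate. The 20 words per segment and 3 requests per segment come from above; the speaking rate of 150 words per minute is purely an assumption, and the function name `estimate_requests` is made up for this sketch.

```python
import math


def estimate_requests(
    minutes: float,
    words_per_minute: float = 150,  # assumed speaking rate, varies by show
    words_per_segment: int = 20,
    heads: int = 3,
) -> int:
    """Rough count of LLM requests needed to score one episode."""
    total_words = minutes * words_per_minute
    segments = math.ceil(total_words / words_per_segment)
    return segments * heads


if __name__ == "__main__":
    print(estimate_requests(60))  # 1350 under the assumptions above
```

This ignores any overlap between segments, which would push the number higher.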

4. Post-Processing: Making the Edits Natural

Once segments were flagged, I applied a few key heuristics:

- Ignore very short detections (<10s) to avoid spurious cuts.

- Merge adjacent detections so multiple ads become a single block.
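These two heuristics can be sketched as a single pass over the flagged time spans. The 10-second minimum comes from the list above; the 2-second `max_gap` for what counts as "adjacent" is an assumed value, and so is the choice to merge first and filter second, which keeps an ad that was detected as several short fragments.

```python
def clean_detections(
    spans: list[tuple[float, float]],
    min_len: float = 10.0,  # seconds; shorter blocks are treated as spurious
    max_gap: float = 2.0,   # seconds; assumed definition of "adjacent"
) -> list[tuple[float, float]]:
    """Merge nearby (start, end) detections, then drop blocks shorter than min_len."""
    merged: list[list[float]] = []
    for start, end in sorted(spans):
        if merged and start - merged[-1][1] <= max_gap:
            # Close enough to the previous block: extend it.
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return [(s, e) for s, e in merged if e - s >= min_len]


if __name__ == "__main__":
    flagged = [(100, 104), (105, 130), (300, 305), (600, 640), (641, 660)]
    print(clean_detections(flagged))  # [(100, 130), (600, 660)]
```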

5. Adding Speaker-Aware Detection

Ad reads often sound different: a new cadence, even a new voice.

Using whisperx for diarization let me:

- Detect changes of speaker and pass that information to the model to help it identify ad spots.

- Separate “in-topic host” from “host reading copy.”

This made a big difference for hosts who subtly switch into ad voice, and it really helped with non-host-read ads.
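One simple way to hand speaker changes to the model is to annotate the transcript text itself. The sketch below assumes diarized segments arrive as dicts with `start`, `text`, and `speaker` keys, which is roughly the shape whisperx produces after speaker assignment, though you should check it against your own output. The `[SPEAKER CHANGE]` marker and the function name are invented for illustration.

```python
def annotate_speaker_changes(segments: list[dict]) -> str:
    """Render diarized segments as prompt text, marking where the speaker changes."""
    lines = []
    prev_speaker = None
    for seg in segments:
        # Fall back to a placeholder if diarization left a segment unlabeled.
        speaker = seg.get("speaker", "UNKNOWN")
        changed = prev_speaker is not None and speaker != prev_speaker
        marker = " [SPEAKER CHANGE]" if changed else ""
        lines.append(f"[{seg['start']:.1f}s {speaker}]{marker} {seg['text'].strip()}")
        prev_speaker = speaker
    return "\n".join(lines)


if __name__ == "__main__":
    demo = [
        {"start": 12.0, "text": " Welcome back to the show.", "speaker": "SPEAKER_00"},
        {"start": 15.5, "text": " This episode is sponsored by...", "speaker": "SPEAKER_01"},
    ]
    print(annotate_speaker_changes(demo))
```

The annotated text can then replace the plain transcript in whatever prompt scores each segment.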

6. Results

Across interviews, news dailies, and narrative shows, the system consistently removes ~95% of ads. On some shows, that’s 15+ minutes saved per hour. I use it every day on some of my favourite feeds (unless I’m already a member and have an ad-free feed).

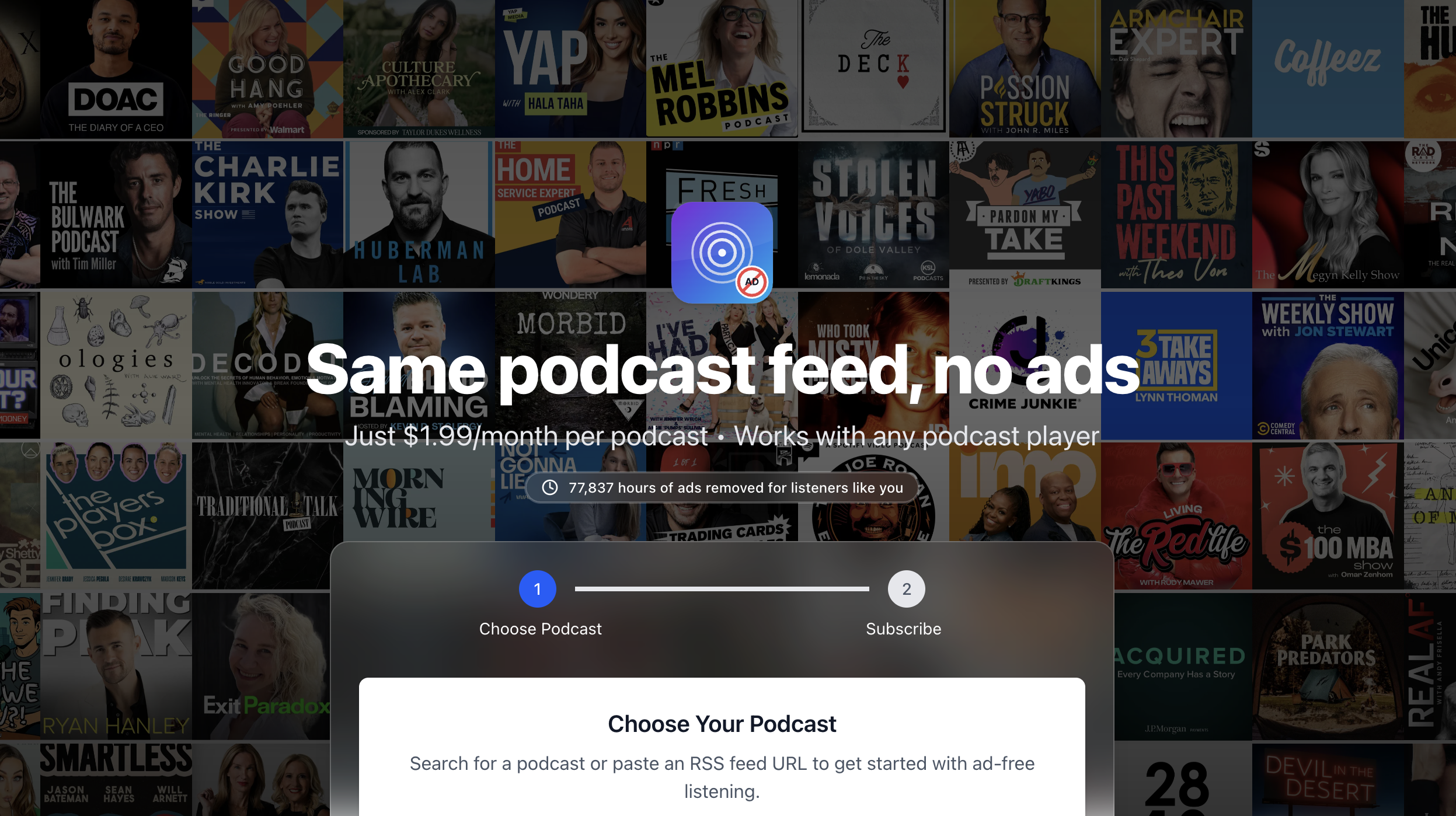

If you’re curious about the productized version, check out PodcastAdBlock.app and DM/email me for a coupon.

7. Cost & Hosting Notes

- My final segment-scoring approach is a lot more expensive than the one-shot prompt approach, but it’s vital for accurate ad blocking IMO.

- Reprocessing episodes after listener reports adds cost too, but once a few of the trickier ads have been reported, it pays off in even better performance.

- Hosting is via DigitalOcean. Inference runs on Modal.com and the DO agent platform, so I only pay for GPUs by the second.

Happy to dive deeper into the prompting strategies, diarization features, or the reprocessing/feedback loop if folks are interested.